Theory of Employee Engagement

Learn about the research behind our engagement construct.

We’ve run hundreds of thousands of surveys for organizations across Asia, with the mission of empowering leaders and human resource (HR) practitioners with a tool to lead change in their company culture.

Through our survey analytics platform, we can measure an intangible asset that many organizations tend to miss: engagement. The questions used in our platform’s recommended surveys were developed through active research in organization science, which allowed us to build a baseline for understanding engagement and productivity in the workplace.

In this article, we cover the basics of how we conducted our research that powers the survey analytics platform.

Literature Review

In the research phase of our engagement platform, we carried out several qualitative and quantitative studies on the current literature on workplace commitment. Emergent data from our literature review was analysed using several statistical techniques, to scrutinise relationships between observed and latent variables.

We aimed to build a questionnaire that was a valid measure of the level of engagement within the organization. We also aimed for the engagement constructs to be highly correlated with actual business outcomes, and thus we discarded any articles about engagement unrelated to actionable insights.

First, we aimed to collect as many academic discussions on the topic as possible and synthesise them into a framework for our engagement instrument. Our queries to the academic database focused on ideas of workplace commitment, human resource development and organisational development.

Research that was not related to measurable, actionable issues for management was removed, resulting in a final database of over 500 peer-reviewed articles from various journals. Finally, we applied several preliminary methods to arrange and sift through the various data sources.

Preliminary Analysis

The first method we employed was content analysis, a research method that provides a systematic and objective means of quantifying phenomena. It allowed us to make replicable and valid inferences from the research in its context and to organise the facts into a structure that can guide practical action. From this analysis, we derived a conceptual system for organising categories within engagement.

Concept mapping, a technique originally developed by Novak in 1984, was also used to develop a graphic representation of data points uncovered through content analysis. This allowed us to structure semantic connections between different ideas and to ascertain whether certain ideas or behaviours are antecedent or consequential. Through this graphic representation, we were able to present a conceptual model for engagement.

Question Bank

Additionally, we conducted a review of published survey instruments measuring employee engagement.

Through this review of the engagement constructs, we assembled a question bank of 198 items aimed at measuring engagement, after eliminating duplicates.

Instrument Design

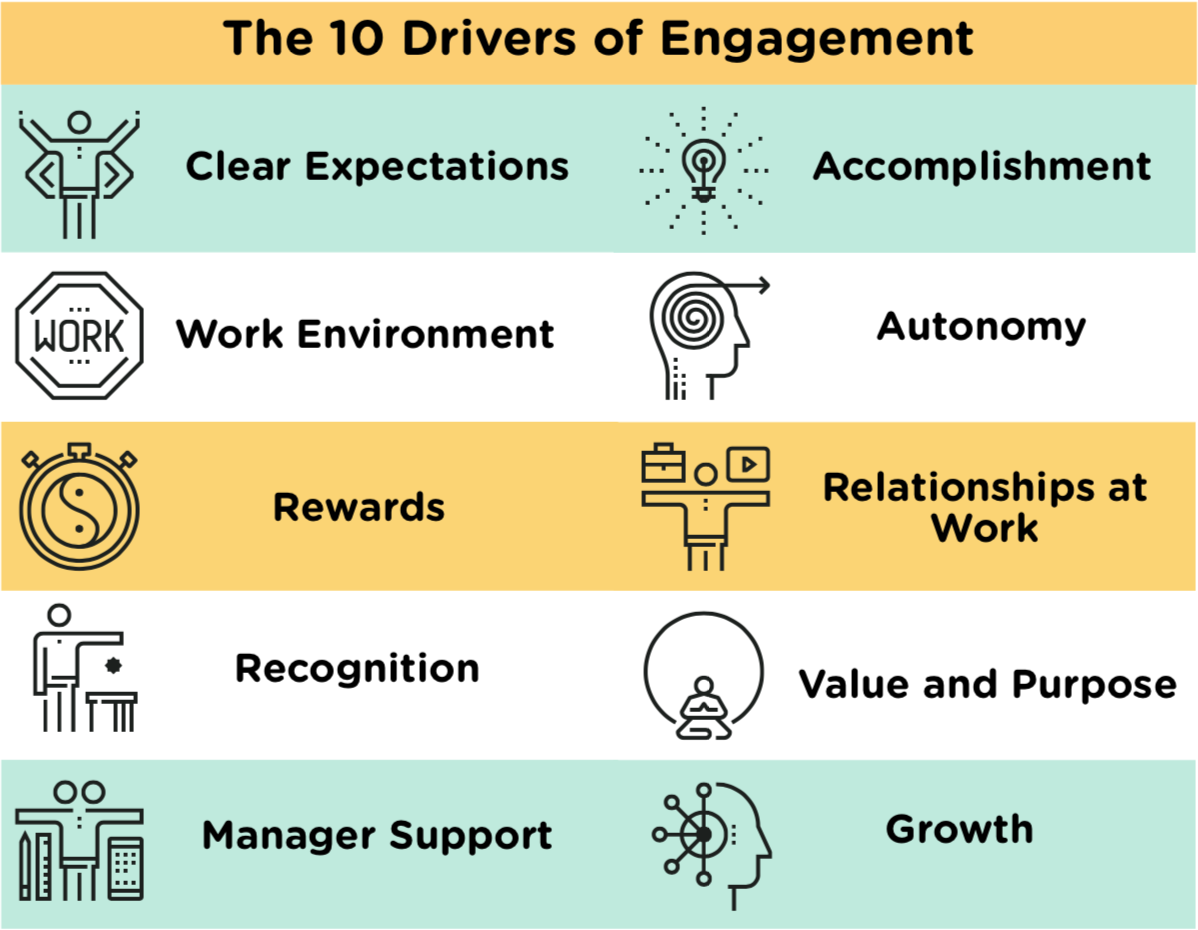

Through our concept mapping and content analysis of the literature on workplace commitment, we identified 10 constructs that capture issues actionable for managers and supervisors. Each construct consists of multiple items measuring the perception of a different element of the workplace, such as autonomy, growth, clear expectations, recognition, relationships at work and manager support.

To build a strong instrument for estimating engagement from survey responses on these constructs, we needed to select items for our Employee Engagement Instrument that were predictive of specific constructs. We therefore set out to systematically sample all content relevant to each target construct.

Through this process, a total of 42 non-redundant items were generated from the question bank.

Validity Checks

Following research in construct measurement theory, we used a content adequacy test to assess the validity of the selected items. Through this test, we eliminated items that did not discriminate between drivers, or whose average score was not sufficiently different from that of other drivers.

Pre-Testing

Survey questions have to be reviewed for comprehension and thus have to be pre-tested on a sample of respondents, to ensure the choice of words or sequencing of the questions does not create misinterpretation.

Participants

We conducted pre-testing by recruiting 20 job incumbents from Singapore. These participants were selected to be representative of the demographic profile of the population of Singapore. All the participants were Singaporeans and were ethnically diverse in proportion to the actual proportions from the 2010 Singapore census. The participants were equally represented in terms of gender. All participants were also proficient in English.

All participants were also representative of different industries in Singapore, such as manufacturing, banking, shipping, hospitality, F&B, automotive, etc. The participants’ education was also similarly profiled, with an equal proportion of O/N Levels certificate holders, A Levels/Diploma certificate holders and Bachelor’s Degree and above certificate holders.

Procedure

Through individual cognitive interviews, participants verbalised their thought processes while answering our beta survey of 42 survey items. Probes were utilised to detect misunderstandings and assess question sensitivity, such as:

Could you tell me in your own words what that question means to you?

Were there questions asked that seemed similar to each other?

Were there any questions that you felt uncomfortable answering?

Summaries of respondents’ verbal reports were reviewed to reveal both general strategies for answering survey questions and difficulties with particular questions. Following the interviews, a debriefing was conducted for respondents to discuss how they answered or interpreted specific questions. After the pre-test, 6 survey items were dropped because participants had difficulties with them on at least one of 3 criteria: comprehension, similarity or discomfort.

Pilot Test

Pilot tests are used as a validating step for researchers to understand how a larger sample size of respondents reacts to the survey under full test conditions.

Participants

For the pilot test, 500 job incumbents from Singapore were recruited. These participants were selected to be representative of the demographic profile of the population of Singapore. All the participants were Singaporean and were ethnically diverse in proportion to the actual proportions from the 2010 Singapore census. The sample was composed of 270 males and 230 females, aged 24 to 60, with an average age of 35. All the participants were also proficient in English.

The participants’ education was profiled with a proportion of 10% O/N Levels certificate holders, 60% A Levels/Diploma certificate holders, and 30% Bachelor’s Degree and above certificate holders. All participants were also representative of different industries in Singapore, such as manufacturing, banking, shipping, hospitality, F&B, automotive, etc.

Procedure

The data was collected in a cross-sectional study of employees across a variety of industries. The survey comprised the 36 items retained from the pre-testing analyses. Employees rated the degree to which they experienced the level of engagement represented by each item. Responses were made on a five-point Likert-type scale anchored at strongly disagree (1) and strongly agree (5).
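To illustrate how responses on such a scale can be turned into numbers, here is a minimal sketch in Python. Only the two anchors (strongly disagree = 1, strongly agree = 5) come from the study; the three intermediate labels, the item names and the construct names are hypothetical and used for illustration only.

```python
# Numeric coding of the response scale. Only the two end anchors are taken
# from the article; the intermediate labels are assumed for illustration.
LIKERT = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def construct_scores(answers, construct_of):
    """Average one respondent's coded answers within each construct.

    answers: dict mapping item name -> response label
    construct_of: dict mapping item name -> construct name
    """
    by_construct = {}
    for item, label in answers.items():
        score = LIKERT[label.strip().lower()]
        by_construct.setdefault(construct_of[item], []).append(score)
    return {name: sum(vals) / len(vals) for name, vals in by_construct.items()}


# Hypothetical items and constructs, not the actual instrument.
construct_of = {"autonomy_1": "autonomy", "autonomy_2": "autonomy", "growth_1": "growth"}
answers = {"autonomy_1": "Strongly agree", "autonomy_2": "Agree", "growth_1": "Disagree"}
scores = construct_scores(answers, construct_of)
```

Averaging within a construct is one common scoring choice; it is shown here as an assumption rather than as the platform's scoring method.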

Results

Each of the 10 constructs showed high internal consistency (Cronbach’s alpha > 0.74), indicating that the items within each construct reliably measure the same underlying concept. Using the data from the pilot study, we deleted items whose corrected item-to-total subscale correlation was not above 0.50.
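The two reliability statistics named above can be computed in a few lines. The sketch below uses only the Python standard library and follows the textbook definitions: Cronbach's alpha from the item variances and the variance of the total score, and the corrected item-to-total correlation as the Pearson correlation between an item and the sum of the other items in its subscale. The item names and response data are made up for illustration; they are not from the pilot study.

```python
from statistics import mean, variance


def pearson(x, y):
    """Pearson correlation between two equal-length score lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def cronbach_alpha(items):
    """items: dict of item name -> list of scores (one per respondent)."""
    cols = list(items.values())
    k = len(cols)
    totals = [sum(row) for row in zip(*cols)]
    item_var = sum(variance(col) for col in cols)
    return k / (k - 1) * (1 - item_var / variance(totals))


def corrected_item_total(items):
    """Correlate each item with the sum of the *other* items in the subscale."""
    result = {}
    for name, col in items.items():
        others = [c for n, c in items.items() if n != name]
        rest = [sum(row) for row in zip(*others)]
        result[name] = pearson(col, rest)
    return result


# Made-up responses from six respondents on a hypothetical four-item subscale.
subscale = {
    "q1": [5, 4, 4, 2, 1, 3],
    "q2": [4, 4, 5, 2, 2, 3],
    "q3": [5, 3, 4, 1, 2, 3],
    "q4": [2, 5, 3, 4, 3, 2],  # deliberately inconsistent with the others
}

# Items at or below the 0.50 threshold are candidates for deletion.
item_total = corrected_item_total(subscale)
flagged = [name for name, r in item_total.items() if r <= 0.50]

# Alpha for the subscale once the flagged items are removed.
kept = {name: col for name, col in subscale.items() if name not in flagged}
alpha = cronbach_alpha(kept)
```

In this toy data set the inconsistent item `q4` is the only one flagged, and alpha for the remaining three items clears the 0.74 mark reported above.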

Correlations among items within the same subscale (intra-subscale correlations) were systematically higher than correlations among items from different subscales (inter-subscale correlations). We also eliminated items that did not align with the dimension we had hypothesised for them, leaving a scale with fewer items.
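One simple way to run this kind of comparison is to average the pairwise item correlations separately for same-subscale and different-subscale pairs. The following is a minimal sketch under that assumption; the item names, subscale assignments and responses are hypothetical, and the averaging approach is an illustration rather than the exact procedure used in the study.

```python
from itertools import combinations
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length score lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def mean_intra_inter(responses, subscale_of):
    """Return (mean intra-subscale r, mean inter-subscale r) over all item pairs."""
    intra, inter = [], []
    for a, b in combinations(responses, 2):
        r = pearson(responses[a], responses[b])
        if subscale_of[a] == subscale_of[b]:
            intra.append(r)
        else:
            inter.append(r)
    return mean(intra), mean(inter)


# Made-up responses from six respondents on four hypothetical items.
responses = {
    "autonomy_1": [5, 4, 4, 2, 1, 3],
    "autonomy_2": [4, 4, 5, 2, 2, 3],
    "recognition_1": [2, 5, 3, 4, 3, 2],
    "recognition_2": [1, 5, 3, 4, 4, 2],
}
subscale_of = {
    "autonomy_1": "autonomy",
    "autonomy_2": "autonomy",
    "recognition_1": "recognition",
    "recognition_2": "recognition",
}

intra_r, inter_r = mean_intra_inter(responses, subscale_of)
```

A well-behaved scale shows `intra_r` clearly above `inter_r`, which is the pattern described above.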

Structural Analysis

We performed a confirmatory factor analysis with latent variable structural equation modeling, using maximum likelihood estimation.

We found that the overall model fit for a second-order structure with 10 constructs as latent indicators of a higher-order engagement factor was very strong.

Conclusion

Through our analysis in deconstructing the factors that impact levels of engagement, we derived 10 distinct drivers of engagement.

For any questions, contact your dedicated Customer Success Manager or email our responsive support team at support@engagerocket.co. We're here to help you every step of the way!